Alibaba’s Qwen Team, a division tasked with developing artificial intelligence (AI) models, released the QwQ-32B AI model on Wednesday. It is a reasoning model that relies on extended test-time compute and shows a visible chain-of-thought (CoT). The developers claim that, despite being much smaller than DeepSeek-R1, the model can match its performance on benchmark scores. Like other AI models released by the Qwen Team, QwQ-32B is also an open-source AI model; however, it is not fully open-sourced, as the training details and datasets are not available.

QwQ-32B Reasoning AI Model Released

In a blog post, Alibaba’s Qwen Team detailed the QwQ-32B reasoning model. The QwQ (short for Qwen with Questions) series of AI models was first introduced by the company in November 2024. These reasoning models were designed to offer an open-source alternative to the likes of OpenAI’s o1 series. QwQ-32B is a 32-billion-parameter model developed by scaling reinforcement learning (RL) techniques.

Explaining the training process, the developers said that the RL scaling approach was applied on top of a cold-start checkpoint. Initially, RL was used only for coding and mathematics-related tasks, and the responses were verified to ensure accuracy. Later, the technique was extended to general capabilities, using rule-based verifiers. The Qwen Team found that this method increased the general capabilities of the model without reducing its math and coding performance.
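To make the idea of verified responses more concrete, the sketch below shows what an outcome-based reward for math and coding tasks might look like. It is an illustration only: the Qwen Team has not published its verifier code, and the function names (`math_reward`, `code_reward`), the answer normalisation, and the pass/fail scoring are all assumptions made for this example.

```python
from fractions import Fraction


def normalize_answer(text: str) -> str:
    """Trim whitespace and a trailing period so '42.' and ' 42' compare equal."""
    return text.strip().rstrip(".").strip()


def math_reward(model_answer: str, reference: str) -> float:
    """Hypothetical accuracy check: 1.0 if the final answer matches, else 0.0."""
    a, b = normalize_answer(model_answer), normalize_answer(reference)
    try:
        # Compare numerically when both sides parse, so '0.5' equals '1/2'.
        return 1.0 if Fraction(a) == Fraction(b) else 0.0
    except (ValueError, ZeroDivisionError):
        # Fall back to an exact string match for non-numeric answers.
        return 1.0 if a == b else 0.0


def code_reward(source: str, func_name: str, cases: list) -> float:
    """Hypothetical code check: 1.0 only if every predefined test case passes.

    `cases` is a list of (args_tuple, expected_result) pairs.
    NOTE: exec() here is unsandboxed; a real system would isolate execution.
    """
    namespace: dict = {}
    try:
        exec(source, namespace)
        func = namespace[func_name]
        passed = all(func(*args) == expected for args, expected in cases)
        return 1.0 if passed else 0.0
    except Exception:
        # Syntax errors, missing functions, and runtime crashes all score zero.
        return 0.0


if __name__ == "__main__":
    print(math_reward("0.5", "1/2"))
    candidate = "def add(a, b):\n    return a + b"
    print(code_reward(candidate, "add", [((1, 2), 3), ((0, 0), 0)]))
```

A binary reward of this kind is simple to check automatically, which is one reason math and coding are natural starting points for RL before moving to broader, harder-to-verify tasks.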

QwQ-32B AI Model benchmarks

Photo Credit: Alibaba

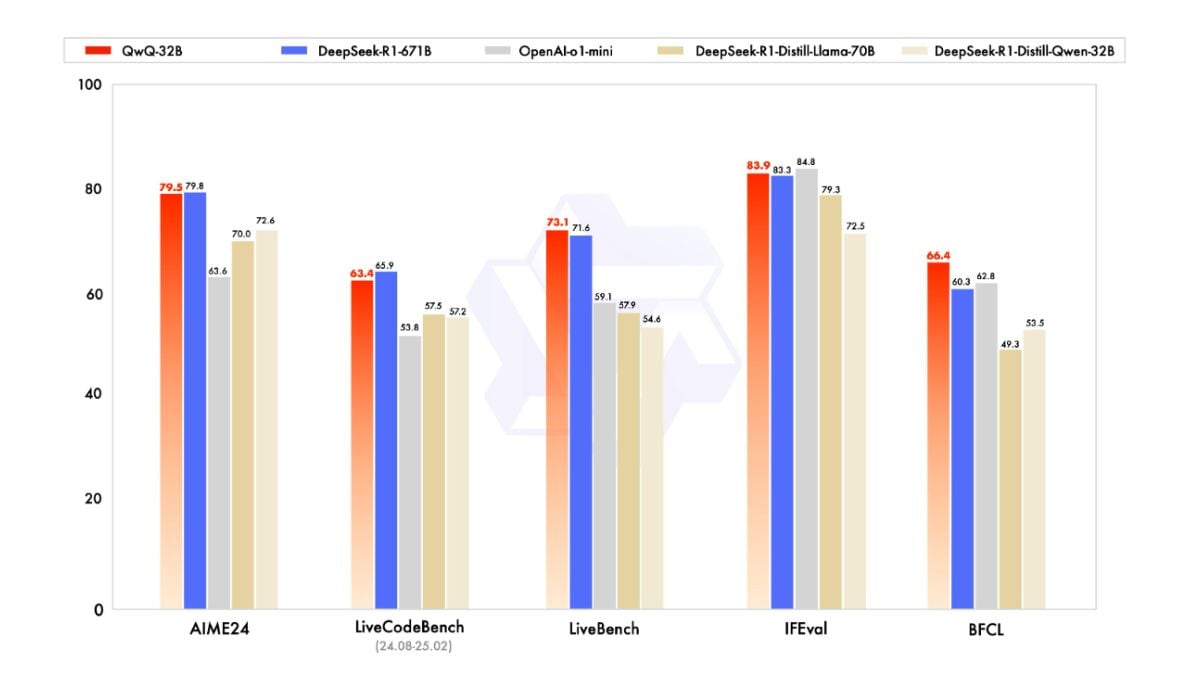

The developers claim that this training approach enabled QwQ-32B to perform at levels similar to DeepSeek-R1, despite the latter being a 671-billion-parameter model (with 37 billion activated). Based on internal testing, the team claims that QwQ-32B outperforms DeepSeek-R1 on the LiveBench (coding), IFEval (chat or instruction fine-tuned language), and Berkeley Function Calling Leaderboard V3 or BFCL (ability to call functions) benchmarks.
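For a sense of scale, the short calculation below compares the parameter counts quoted above. It assumes QwQ-32B is a dense model, meaning all 32 billion parameters are used for every token; that assumption is made for this example and is not stated in the announcement as reported here.

```python
# Parameter counts in billions, as quoted in the article.
QWQ_PARAMS_B = 32      # assumed dense: all parameters active per token
R1_TOTAL_B = 671       # DeepSeek-R1 total parameters
R1_ACTIVE_B = 37       # DeepSeek-R1 parameters activated per token

# How many times larger DeepSeek-R1 is in total, and per token.
total_ratio = R1_TOTAL_B / QWQ_PARAMS_B
active_ratio = R1_ACTIVE_B / QWQ_PARAMS_B

if __name__ == "__main__":
    print(f"Total parameters: DeepSeek-R1 is {total_ratio:.1f}x QwQ-32B")
    print(f"Active parameters: DeepSeek-R1 is {active_ratio:.2f}x QwQ-32B")
```

On these figures, DeepSeek-R1 holds roughly 21 times as many parameters in total, but activates only about 1.16 times as many per token, so the gap in per-token compute is far narrower than the headline sizes suggest.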

Developers and AI enthusiasts can find the open weights of the model on Hugging Face and ModelScope. The model is available under the Apache 2.0 licence, which permits academic, research, and commercial use. However, since the full training details and datasets are not available, the model cannot be fully replicated or deconstructed. DeepSeek-R1, by comparison, was released under the MIT licence, which is similarly permissive.

Those who lack the right hardware to run the AI model locally can access its capabilities via Qwen Chat. The model picker menu at the top-left of the page lets users select the QwQ-32B-preview model.