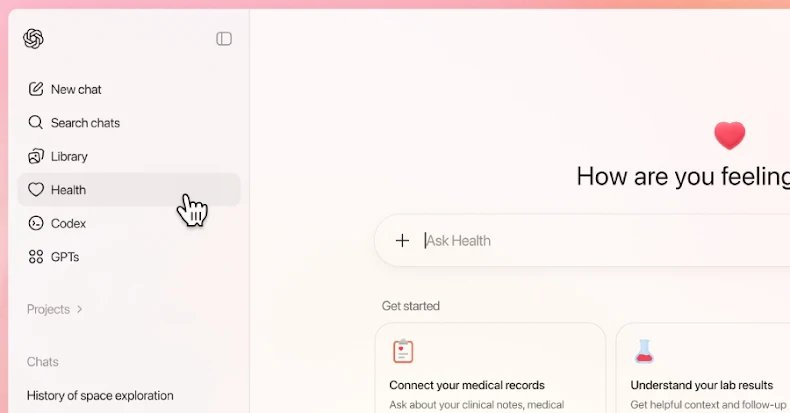

Artificial intelligence (AI) company OpenAI on Wednesday announced the launch of ChatGPT Health, a dedicated space that allows users to have conversations with the chatbot about their health.

To that end, the sandboxed experience offers users the optional ability to securely connect medical records and wellness apps, including Apple Health, Function, MyFitnessPal, Weight Watchers, AllTrails, Instacart, and Peloton, to get tailored responses, lab test insights, nutrition advice, personalized meal ideas, and suggested workout classes.

The new feature is rolling out to users on ChatGPT Free, Go, Plus, and Pro plans outside of the European Economic Area, Switzerland, and the U.K.

“ChatGPT Health builds on the strong privacy, security, and data controls across ChatGPT with additional, layered protections designed specifically for health — including purpose-built encryption and isolation to keep health conversations protected and compartmentalized,” OpenAI said in a statement.

Noting that over 230 million people globally ask health and wellness-related questions on the platform every week, OpenAI emphasized that the tool is designed to support medical care, not replace it or serve as a substitute for diagnosis or treatment.

The company also highlighted the various privacy and safety measures built into the Health experience:

- Health operates in a silo, with enhanced privacy and its own memory, to safeguard sensitive data using “purpose-built” encryption and isolation

- Conversations in Health are not used to train OpenAI’s foundation models

- Users who attempt to have a health-related conversation in ChatGPT are prompted to switch over to Health for additional protections

- Health information and memories are not used to contextualize non-Health chats

- Conversations outside of Health cannot access data, conversations, or memories created within Health

- Apps can only connect with users’ health data with their explicit permission, even if they are already connected to ChatGPT for conversations outside of Health

- All apps available in Health are required to meet OpenAI’s privacy and security requirements, such as collecting only the minimum data needed, and must undergo an additional security review before being included in Health

In addition, OpenAI pointed out that it has evaluated the model that powers Health against clinical standards using HealthBench, a benchmark the company released in May 2025 to better measure the capabilities of AI systems for health, with a focus on safety, clarity, and escalation of care.

“This evaluation-driven approach helps ensure the model performs well on the tasks people actually need help with, including explaining lab results in accessible language, preparing questions for an appointment, interpreting data from wearables and wellness apps, and summarizing care instructions,” it added.

OpenAI’s announcement follows an investigation by The Guardian that found Google AI Overviews providing false and misleading health information. OpenAI and Character.AI are also facing several lawsuits claiming their tools drove people to suicide and harmful delusions after they confided in the chatbots. A report published by SFGate earlier this week detailed how a 19-year-old died of a drug overdose after trusting ChatGPT for medical advice.